October 25, 2021

Kevin Fisher is AVAC’s Director of Policy, Data and Analytics.

Measuring the impact and meaning of advocacy can be difficult. Often goals are achieved or real progress is made, but documenting the role of advocates in those successes can be a challenge. At the same time, campaigns may not achieve every goal to change policies, fund programs or pass legislation, yet may still have advanced an issue, broken new ground or taken important steps. Those evaluating campaigns may not be able to show the impact of advocacy or see the signals of progress. Yet it’s critical to assess strategies in real time. AVAC has tried to keep a close eye on its work, gauging both success and areas where more work or different thinking is needed.

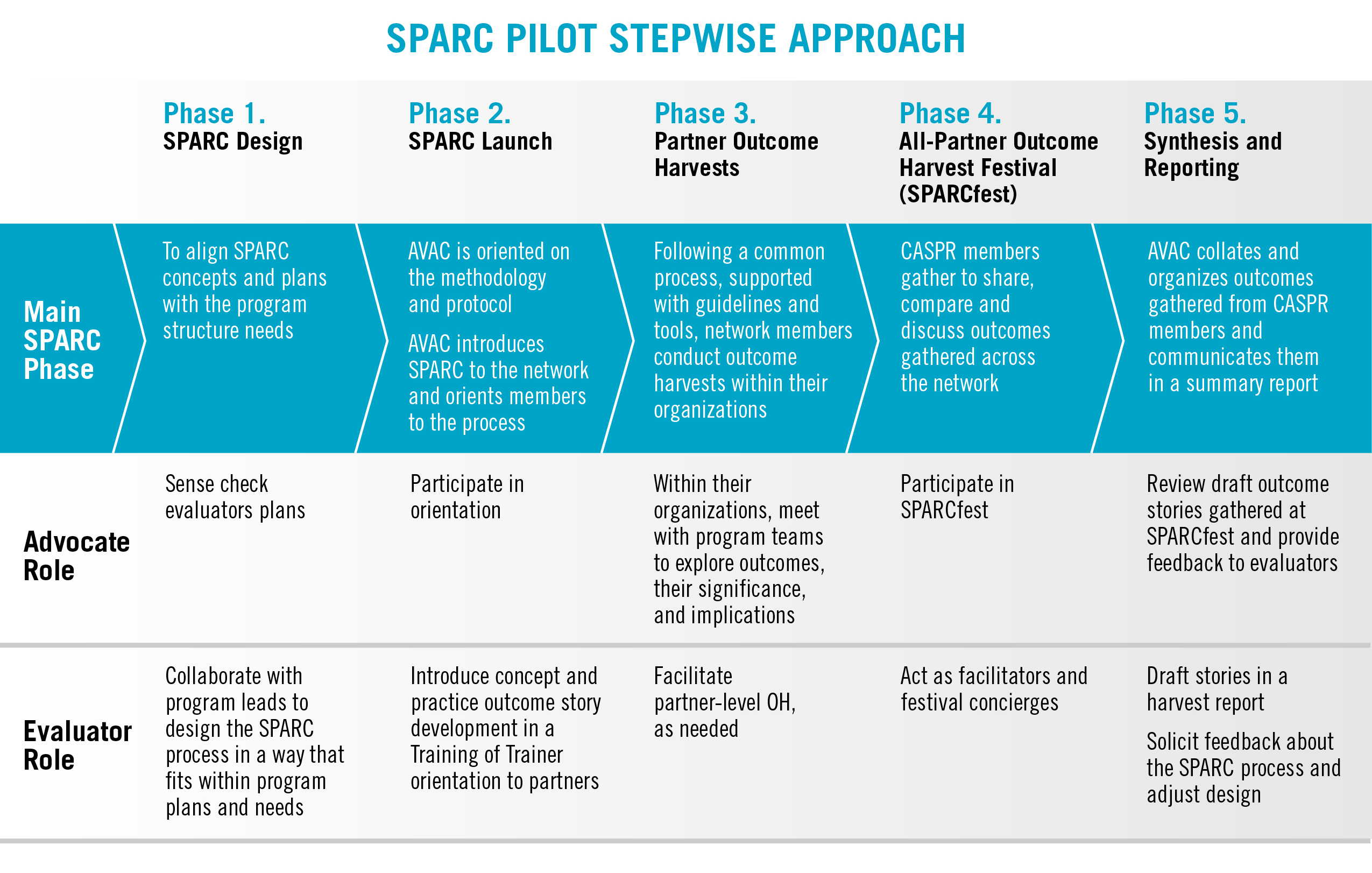

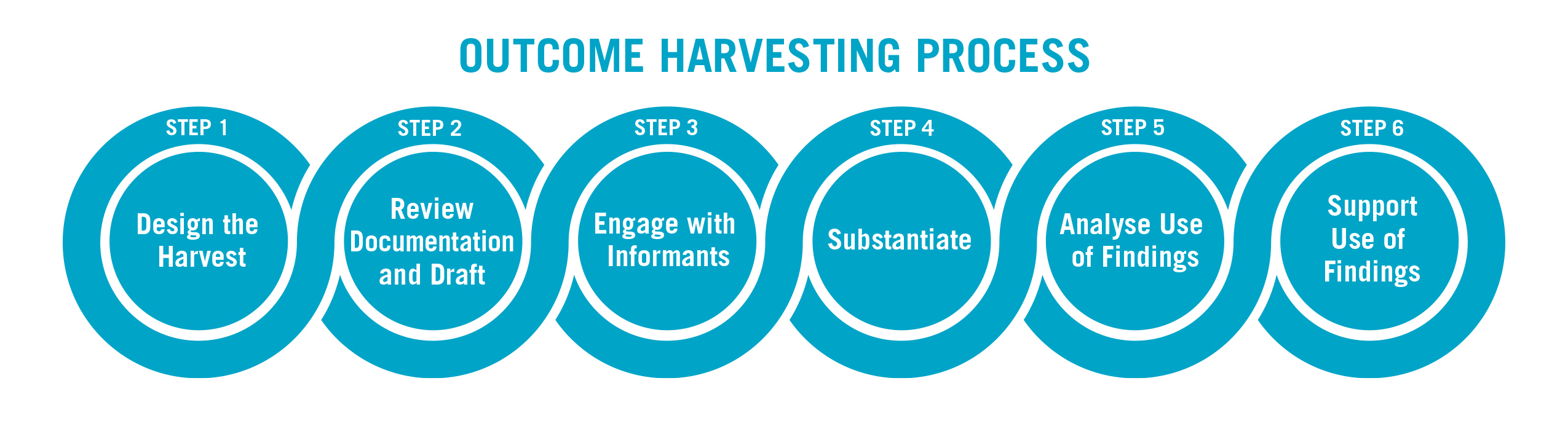

Through the Coalition to Accelerate & Support Prevention Research (CASPR), designed by AVAC in collaboration with key partners and supported by USAID, we created an evaluation tool called Simple, Participatory Assessment of Real Change, or SPARC. It’s a participatory system designed to identify outcomes and place advocates at the heart of the evaluation process. As used in CASPR, SPARC harvests “outcome stories” from partners and reviews these stories across the CASPR network in sessions dubbed SPARC-fests. Fellow advocates hear the stories, provide feedback, ask questions and test ideas. It is, in a way, a system of peer review for advocates.

Former AVAC staffer Jules Dasmarinas and consultant Rhonda Schlangen discuss AVAC’s SPARC program in an article titled “Pushing boundaries: Advocacy evaluation and real-time learning in an HIV prevention research advocacy coalition in sub-Saharan Africa,” published in a special issue of New Directions for Evaluation.

Why SPARC?

In 2016, AVAC received funding from the US Agency for International Development (USAID) to develop and implement the CASPR program. CASPR began with its own monitoring, evaluation and learning (MEL) system to document outcomes and track indicators of progress. But given the breadth of CASPR partners, AVAC sought a more participatory and inclusive approach that would capture nuances and share lessons for success. SPARC grew from this impulse. SPARC provides a platform for all CASPR members to identify signs of progress and frame outcomes across the Coalition’s priorities. SPARC enables CASPR members and AVAC project staff to see themselves as active contributors in the evaluation process. SPARC demystifies evaluation, expands the discourse, cultivates innovation and informs the advocacy.

As Jules and Rhonda found, SPARC highlighted the following:

- Participants are connected through shared goals and are incentivized to invest in developing relationships and trust. This enables SPARC participants to build off a common foundation and use the process to develop a more nuanced and useful analysis of their collective progress.

- SPARC is integrated into forums that network members use to collaborate and plan their work. This enables SPARC to seamlessly feed into planning, which is actionable learning. By demonstrating immediate application and benefits, SPARC is less likely to be stigmatized as an evaluation process.

- Program managers intentionally calibrate SPARC to integrate and balance with other demands on network participants’ time through exploratory conversations with evaluators.

- Evaluators take a “work ourselves out of a job” approach to ensure focus is on support and facilitation of substantive participation. Calibrating evaluators’ role with an eye toward expanding ownership of network members enables evaluators to identify opportunities to support SPARC processes, such as categorizing outcomes that partners may not have time or feel well equipped to conduct.

Where to, SPARC?

Advocacy programs often face erratic funding and questions about their value. Rigorous and multiple evaluation strategies for the advocacy field are important both for prioritizing effective work and for making the case for supporting advocacy. New advocacy evaluation tools, like SPARC, help advocates show the pace of progress and real impact on their way to achieving major goals. By design, SPARC is a dynamic approach and can be adapted to other coalitions or movements that aspire to learn from all voices in the evaluation process. One AVAC partner, PZAT, has an initiative adapting SPARC to its work with the COMPASS Africa project, and AVAC is working with PZAT and others as we update our overarching agenda for monitoring, evaluation and learning across all that we do.